homebrew mac 安装

最近由于老的homebrew网站被墙,安装的话需要重新指定到国内的清华镜像来进行安装。

注意在安装脚本之后最好更新一下操作系统,然后再在AppStore下载一个XCode。这样方便管理很多第三方插件。

下载安装脚本

https://raw.githubusercontent.com/Homebrew/install/master/install.sh

可以翻墙然后下载安装脚本install.sh

下载完之后可以将脚本中的

BREW_REPO修改为当前我们的镜像位置

BREW_REPO="https://mirrors.tuna.tsinghua.edu.cn/git/homebrew/brew.git"

修改完成之后在运行 sh install.sh 安装即可

H5 平台几种方案

1. 使用百度H5

买一台主机配置一下,使用起来比较方便

2. 鲁班H5平台

有源码,但不适用于商用

3. swiper

swiper是一个基础框架库,可以用这个来自己手写

4. taro

https://taro.aotu.io/

框架是京东下面的一个很有意思的框架,可以同时做app、小程序、H5多端,同时提供了比较好的案例可以直接下载使用

使用react+redux实现,比较有意思,可以研究一下。

5. wechat-h5-boilerplate

https://github.com/panteng/wechat-h5-boilerplate

可以用这个手写,体验效果还是比较好的

可以考虑使用

6. iSlider

也是一个可以自己实现的插件工具

一篇博客介绍相关H5运营内容

http://www.ptbird.cn/h5-tool.html

多端开发框架汇总

https://blog.fundebug.com/2019/03/28/compare-wechat-app-frameworks/

腾讯开源

The Django Speed Handbook: making a Django app faster

这篇文章真的写的非常好,讲解到了django是如何一步一步做性能优化的,可以优化的点还是很多的。

Over the course of developing several Django apps, I’ve learned quite a bit about speed optimizations. Some parts of this process, whether on the backend or frontend, are not well-documented. I’ve decided to collect most of what I know in this article.

If you haven’t taken a close look at the performance of your web-app yet, you’re bound to find something good here.

What’s in this article?

Why speed is important

On the web, 100 milliseconds can make a significant difference and 1 second is a lifetime. Countless studies indicate that faster loading times are associated with better conversion-rates, user-retention, and organic traffic from search engines. Most importantly, they provide a better user experience.

Different apps, different bottlenecks

There are many techniques and practices to optimize your web-app’s performance. It’s easy to get carried away. Look for the highest return-to-effort ratio. Different web-apps have different bottlenecks and therefore will gain the most when those bottlenecks are taken care of. Depending on your app, some tips will be more useful than others.

While this article is catered to Django developers, the speed optimization tips here can be adjusted to pretty much any stack. On the frontend side, it’s especially useful for people hosting with Heroku and who do not have access to a CDN service.

Analyzing and debugging performance issues

On the backend, I recommend the tried-and-true django-debug-toolbar. It will help you analyze your request/response cycles and see where most of the time is spent. Especially useful because it provides database query execution times and provides a nice SQL EXPLAIN in a separate pane that appears in the browser.

Google PageSpeed will display mainly frontend related advice, but some can apply to the backend as well (like server response times). PageSpeed scores do not directly correlate with loading times but should give you a good picture of where the low-hanging fruits for your app are. In development environments, you can use Google Chrome’s Lighthouse which provides the same metrics but can work with local network URIs. GTmetrix is another detail-rich analysis tool.

Disclaimer

Some people will tell you that some of the advice here is wrong or lacking. That’s okay; this is not meant to be a bible or the ultimate go-to-guide. Treat these techniques and tips as ones you may use, not should or must use. Different needs call for different setups.

Backend: the database layer

Starting with the backend is a good idea since it’s usually the layer that’s supposed to do most of the heavy lifting behind the scenes.

There’s little doubt in my mind which two ORM functionalities I want to mention first: these are select_related and prefetch_related. They both deal specifically with retrieving related objects and will usually improve speed by minimizing the number of database queries.

select_related

Let’s take a music web-app for example, which might have these models:

# music/models.py, some fields & code omitted for brevity

class RecordLabel(models.Model):

name = models.CharField(max_length=560)

class MusicRelease(models.Model):

title = models.CharField(max_length=560)

release_date = models.DateField()

class Artist(models.Model):

name = models.CharField(max_length=560)

label = models.ForeignKey(

RecordLabel,

related_name="artists",

on_delete=models.SET_NULL

)

music_releases = models.ManyToManyField(

MusicRelease,

related_name="artists"

)

So each artist is related to one and only one record company and each record company can sign multiple artists: a classic one-to-many relationship. Artists have many music-releases, and each release can belong to one artist or more.

I’ve created some dummy data:

- 20 record labels

- each record label has 25 artists

- each artist has 100 music releases

Overall, we have ~50,500 of these objects in our tiny database.

Now let’s wire-up a fairly standard function that pulls our artists and their label. django_query_analyze is a decorator I wrote to count the number of database queries and time to run the function. Its implementation can be found in the appendix.

# music/selectors.py

@django_query_analyze

def get_artists_and_labels():

result = []

artists = Artist.objects.all()

for artist in artists:

result.append({"name": artist.name, "label": artist.label.name})

return result

get_artists_and_labels is a regular function which you may use in a Django view. It returns a list of dictionaries, each contains the artist’s name and their label. I’m accessing artist.label.name to force-evaluate the Django QuerySet; you can equate this to trying to access these objects in a Jinja template:

{% for artist in artists_and_labels %}

<p>Name: {{ artist.name }}, Label: {{ artist.label.name }}</p>

{% endfor %}

Now let’s run this function:

ran function get_artists_and_labels

--------------------

number of queries: 501

Time of execution: 0.3585s

So we’ve pulled 500 artists and their labels in 0.36 seconds, but more interestingly — we’ve hit the database 501 times. Once for all the artists, and 500 more times: once for each of the artists’ labels. This is called “The N+1 problem”. Let’s tell Django to retrieve each artist’s label in the same query with select_related:

@django_query_analyze

def get_artists_and_labels_select_related():

result = []

artists = Artist.objects.select_related("label") # select_related

for artist in artists:

result.append(

{"name": artist.name, "label": artist.label.name if artist.label else "N/A"}

)

return result

Now let’s run this:

ran function get_artists_and_labels_select_related

--------------------

number of queries: 1

Time of execution: 0.01481s

500 queries less and a 96% speed improvement.

prefetch_related

Let’s look at another function, for getting each artist’s first 100 music releases:

@django_query_analyze

def get_artists_and_releases():

result = []

artists = Artist.objects.all()[:100]

for artist in artists:

result.append(

{

"name": artist.name,

"releases": [release.title for release in artist.music_releases.all()],

}

)

return result

How long does it take to fetch 100 artists and 100 releases for each one of them?

ran function get_artists_and_releases

--------------------

number of queries: 101

Time of execution: 0.18245s

Let’s change the artists variable in this function and add select_related so we can bring the number of queries down and hopefully get a speed boost:

artists = Artist.objects.select_related("music_releases")

If you actually do that, you’ll get an error:

django.core.exceptions.FieldError: Invalid field name(s) given in select_related: 'music_releases'. Choices are: label

That’s because select_related can only be used to cache ForeignKey or OneToOneField attributes. The relationship between Artist and MusicRelease is many-to-many though, and that’s where prefetch_ related comes in:

@django_query_analyze

def get_artists_and_releases_prefetch_related():

result = []

artists = Artist.objects.all()[:100].prefetch_related("music_releases") # prefetch_related

for artist in artists:

result.append(

{

"name": artist.name,

"releases": [rel.title for rel in artist.music_releases.all()],

}

)

return result

select_related can only cache the “one” side of the “one-to-many” relationship, or either side of a “one-to-one” relationship. You can use prefetch_related for all other caching, including the many side in one-to-many relationships, and many-to-many relationships. Here’s the improvement in our example:

ran function get_artists_and_releases_prefetch_related

--------------------

number of queries: 2

Time of execution: 0.13239s

Nice.

Things to keep in mind about select_related and prefetch_related:

- If you aren’t pooling your database connections, the gains will be even bigger because of fewer roundtrips to the database.

- For very large result-sets, running

prefetch_relatedcan actually make things slower. - One database query isn’t necessarily faster than two or more.

Indexing

Indexing your database columns can have a big impact on query performance. Why then, is it not the first clause of this section? Because indexing is more complicated than simply scattering db_index=True on your model fields.

Creating an index on frequently accessed columns can improve the speed of look-ups pertaining to them. Indexing comes at the cost of additional writes and storage space though, so you should always measure your benefit:cost ratio. In general, creating indices on a table will slow down inserts/updates.

Take only what you need

When possible, use values() and especially values_list() to only pull the needed properties of your database objects. Continuing our example, if we only want to display a list of artist names and don’t need the full ORM objects, it’s usually better to write the query like so:

artist_names = Artist.objects.values('name')

# <QuerySet [{'name': 'Chet Faker'}, {'name': 'Billie Eilish'}]>

artist_names = Artist.objects.values_list('name')

# <QuerySet [('Chet Faker',), ('Billie Eilish',)]>

artist_names = Artist.objects.values_list('name', flat=True)

# <QuerySet ['Chet Faker', 'Billie Eilish']>

Haki Benita, a true database expert (unlike me), reviewed some parts of this section. You should read Haki’s blog.

Backend: the request layer

The next layer we’re going to look at is the request layer. These are your Django views, context processors, and middleware. Good decisions here will also lead to better performance.

Pagination

In the section about select_related we were using the function to return 500 artists and their labels. In many situations returning this many objects is either unrealistic or undesirable. The section about pagination in the Django docs is crystal clear on how to work with the Paginator object. Use it when you don’t want to return more than N objects to the user, or when doing so makes your web-app too slow.

Asynchronous execution/background tasks

There are times when a certain action inevitably takes a lot of time. For example, a user requests to export a big number of objects from the database to an XML file. If we’re doing everything in the same process, the flow looks like this:

web: user requests file -> process file -> return response

Say it takes 45 seconds to process this file. You’re not really going to let the user wait all this time for a response. First, because it’s a horrible experience from a UX standpoint, and second, because some hosts will actually cut the process short if your app doesn’t respond with a proper HTTP response after N seconds.

In most cases, the sensible thing to do here is to remove this functionality from the request-response loop and relay it to a different process:

web: user requests file -> delegate to another process -> return response

|

v

background process: receive job -> process file -> notify user

Background tasks are beyond the scope of this article but if you’ve ever needed to do something like the above I’m sure you’ve heard of libraries like Celery.

Compressing Django’s HTTP responses

This is not to be confused with static-file compression, which is mentioned later in the article.

Compressing Django’s HTTP/JSON responses also stands to save your users some latency. How much exactly? Let’s check the number of bytes in our response’s body without any compression:

Content-Length: 66980

Content-Type: text/html; charset=utf-8

So our HTTP response is around 67KB. Can we do better? Many use Django’s built-in GZipMiddleware for gzip compression, but today the newer and more effective brotli enjoys the same support across browsers (except IE11, of course).

Important: Compression can potentially open your website to security breaches, as mentioned in the GZipMiddleware section of the Django docs.

Let’s install the excellent django-compression-middleware library. It will choose the fastest compression mechanism supported by the browser by checking the request’s Accept-Encoding headers:

pip install django-compression-middleware

Include it in our Django app’s middleware:

MIDDLEWARE = [

"django.middleware.security.SecurityMiddleware",

"django.contrib.sessions.middleware.SessionMiddleware",

"django.contrib.auth.middleware.AuthenticationMiddleware",

"compression_middleware.middleware.CompressionMiddleware",

# ...

]

And inspect the body’s Content-Length again:

Content-Encoding: br

Content-Length: 7239

Content-Type: text/html; charset=utf-8

The body size is now 7.24KB, 89% smaller. You can certainly argue this kind of operation should be delegated to a dedicated server like Ngnix or Apache. I’d argue that everything is a balance between simplicity and resources.

Caching

Caching is the process of storing the result of a certain calculation for faster future retrieval. Django has an excellent caching framework that lets you do this on a variety of levels and using different storage backends.

Caching can be tricky in data-driven apps: you’d never want to cache a page that’s supposed to display up-to-date, realtime information at all times. So, the big challenge isn’t so much setting up caching as it is figuring out what should be cached, for how long, and understanding when or how the cache is invalidated.

Before resorting to caching, make sure you’ve made proper optimizations at the database-level and/or on the frontend. If designed and queried properly, databases are ridiculously fast at pulling out relevant information at scale.

Frontend: where it gets hairier

Reducing static files/assets sizes can significantly speed up your web application. Even if you’ve done everything right on the backend, serving your images, CSS, and JavaScript files inefficiently will degrade your application’s speed.

Between compiling, minifying, compressing, and purging, it’s easy to get lost. Let’s try not to.

Serving static-files

You have several options on where and how to serve static files. Django’s docs mention a dedicated server running Ngnix and Apache, Cloud/CDN, or the same-server approach.

I’ve gone with a bit of a hybrid attitude: images are served from a CDN, large file-uploads go to S3, but all serving and handling of other static assets (CSS, JavaScript, etc…) is done using WhiteNoise (covered in-detail later).

Vocabulary

Just to make sure we’re on the same page, here’s what I mean when I say:

- Compiling: If you’re using SCSS for your stylesheets, you’ll first have to compile those to CSS because browsers don’t understand SCSS.

- Minifying: reducing whitespace and removing comments from CSS and JS files can have a significant impact on their size. Sometimes this process involves uglifying: the renaming of long variable names to shorter ones, etc…

- Compressing/Combining: for CSS and JS, combining multiple files to one. For images, usually means removing some data from images to make their files size smaller.

- Purging: remove unneeded/unused code. In CSS for example: removing selectors that aren’t used.

Serving static files from Django with WhiteNoise

WhiteNoise allows your Python web-application to serve static assets on its own. As its author states, it comes in when other options like Nginx/Apache are unavailable or undesired.

Let’s install it:

pip install whitenoise[brotli]

Before enabling WhiteNoise, make sure your STATIC_ROOT is defined in settings.py:

STATIC_ROOT = os.path.join(BASE_DIR, "staticfiles")

To enable WhiteNoise, add its WhiteNoise middleware right below SecurityMiddleware in settings.py:

MIDDLEWARE = [

'django.middleware.security.SecurityMiddleware',

'whitenoise.middleware.WhiteNoiseMiddleware',

# ...

]

In production, you’ll have to run manage.py collectstatic for WhiteNoise to work.

While this step is not mandatory, it’s strongly advised to add caching and compression:

STATICFILES_STORAGE = 'whitenoise.storage.CompressedManifestStaticFilesStorage'

Now whenever it encounters a {% static %} tag in templates, WhiteNoise will take care of compressing and caching the file for you. It also takes care of cache-invalidation.

One more important step: To ensure that we get a consistent experience between development and production environments, we add runserver_nostatic:

INSTALLED_APPS = [

'whitenoise.runserver_nostatic',

'django.contrib.staticfiles',

# ...

]

This can be added regardless of whether DEBUG is True or not, because you don’t usually run Django via runserver in production.

I found it useful to also increase the caching time:

# Whitenoise cache policy

WHITENOISE_MAX_AGE = 31536000 if not DEBUG else 0 # 1 year

Wouldn’t this cause problems with cache-invalidation? No, because WhiteNoise creates versioned files when you run collectstatic:

<link rel="stylesheet" href="/static/CACHE/css/4abd0e4b71df.css" type="text/css" media="all">

So when you deploy your application again, your static files are overwritten and will have a different name, thus the previous cache becomes irrelevant.

Compressing and combining with django-compressor

WhiteNoise already compresses static files, so django-compressor is optional. But the latter offers an additional enhancement: combining the files. To use compressor with WhiteNoise we have to take a few extra steps.

Let’s say the user loads an HTML document that links three .css files:

<head>

<link rel="stylesheet" href="base.css" type="text/css" media="all">

<link rel="stylesheet" href="additions.css" type="text/css" media="all">

<link rel="stylesheet" href="new_components.css" type="text/css" media="all">

</head>

Your browser will make three different requests to these locations. In many scenarios it’s more effective to combine these different files when deploying, and django-compressor does that with its {% compress css %} template tag:

This:

{% load compress %}

<head>

{% compress css %}

<link rel="stylesheet" href="base.css" type="text/css" media="all">

<link rel="stylesheet" href="additions.css" type="text/css" media="all">

<link rel="stylesheet" href="new_components.css" type="text/css" media="all">

{% compress css %}

</head>

Becomes:

<head>

<link rel="stylesheet" href="combined.css" type="text/css" media="all">

</head>

Let’s go over the steps to make django-compressor and WhiteNoise play well. Install:

pip install django_compressor

Tell compressor where to look for static files:

COMPRESS_STORAGE = "compressor.storage.GzipCompressorFileStorage"

COMPRESS_ROOT = os.path.abspath(STATIC_ROOT)

Because of the way these two libraries intercept the request-response cycle, they’re incompatible with their default configurations. We can overcome this by modifying some settings.

I prefer to use environment variables in .env files and have one Django settings.py, but if you have settings/dev.py and settings/prod.py, you’ll know how to convert these values:

main_project/settings.py:

from decouple import config

#...

COMPRESS_ENABLED = config("COMPRESS_ENABLED", cast=bool)

COMPRESS_OFFLINE = config("COMPRESS_OFFLINE", cast=bool)

COMPRESS_OFFLINE is True in production and False in development. COMPRESS_ENABLED is True in both

.

With offline compression, one must run manage.py compress on every deployment. On Heroku, you’ll want to disable the platform from automatically running collectstatic for you (on by default) and instead opt to do that in the post_compile hook, which Heroku will run when you deploy. If you don’t already have one, create a folder called bin at the root of your project and inside of it a file called post_compile with the following:

python manage.py collectstatic --noinput

python manage.py compress --force

python manage.py collectstatic --noinput

Another nice thing about compressor is that it can compress SCSS/SASS files:

COMPRESS_PRECOMPILERS = (

("text/x-sass", "django_libsass.SassCompiler"),

("text/x-scss", "django_libsass.SassCompiler"),

)

Minifying CSS & JS

Another important thing to apply when talking about load-times and bandwidth usage is minifying: the process of (automatically) decreasing your code’s file-size by eliminating whitespace and removing comments.

There are several approaches to take here, but if you’re using django-compressor specifically, you get that for free as well. You just need to add the following (or any other filters compressor supports) to your settings.py file:

COMPRESS_FILTERS = {

"css": [

"compressor.filters.css_default.CssAbsoluteFilter",

"compressor.filters.cssmin.rCSSMinFilter",

],

"js": ["compressor.filters.jsmin.JSMinFilter"],

}

Defer-loading JavaScript

Another thing that contributes to slower performance is loading external scripts. The gist of it is that browsers will try to fetch and execute JavaScript files in the <head> tag as they are encountered and before parsing the rest of the page:

<html>

<head>

<script src="https://will-block.js"></script>

<script src="https://will-also-block.js"></script>

</head>

</html>

We can use the async and defer keywords to mitigate this:

<html>

<head>

<script async src="somelib.somecdn.js"></script>

</head>

</html>

async and defer both allow the script to be fetched asynchronously without blocking. One of the key differences between them is when the script is allowed to execute: With async, once the script has been downloaded, all parsing is paused until the script has finished executing, while with defer the script is executed only after all HTML has been parsed.

I suggest referring to Flavio Copes’ article on the defer and aysnc keywords. Its general conclusion is:

The best thing to do to speed up your page loading when using scripts is to put them in the

head, and add adeferattribute to yourscripttag.

Lazy-loading images

Lazily loading images means that we only request them when or a little before they enter the client’s (user’s) viewport. It saves time and bandwidth ($ on cellular networks) for your users. With excellent, dependency-free JavaScript libraries like LazyLoad, there really isn’t an excuse to not lazy-load images. Moreover, Google Chrome natively supports the lazy attribute since version 76.

Using the aforementioned LazyLoad is fairly simple and the library is very customizable. In my own app, I want it to apply on images only if they have a lazy class, and start loading an image 300 pixels before it enters the viewport:

$(document).ready(function (e) {

new LazyLoad({

elements_selector: ".lazy", // classes to apply to

threshold: 300 // pixel threshold

})

})

Now let’s try it with an existing image:

<img class="album-artwork" alt="{{ album.title }}" src="{{ album.image_url }}">

We replace the src attribute with data-src and add lazy to the class attribute:

<img class="album-artwork lazy" alt="{{ album.title }}" data-src="{{ album.image_url }}">

Now the client will request this image when the latter is 300 pixels under the viewport.

If you have many images on certain pages, using lazy-loading will dramatically improve your load times.

Optimize & dynamically scale images

Another thing to consider is image-optimization. Beyond compression, there are two more techniques to consider here.

First, file-format optimization. There are newer formats like WebP that are presumably 25-30% smaller than your average JPEG image at the same quality. As of 02/2020 WebP has decent but incomplete browser support, so you’ll have to provide a standard format fallback if you want to use it.

Second, serving different image-sizes to different screen sizes: if some mobile device has a maximum viewport width of 650px, then why serve it the same 1050px image you’re displaying to 13″ 2560px retina display?

Here, too, you can choose the level of granularity and customization that suits your app. For simpler cases, You can use the srcset attribute to control sizing and be done at that, but if for example you’re also serving WebP with JPEG fallbacks for the same image, you may use the <picture> element with multiple sources and source-sets.

If the above sounds complicated for you as it does for me, this guide should help explain the terminology and use-cases.

Unused CSS: Removing imports

If you’re using a CSS framework like Bootstrap, don’t just include all of its components blindly. In fact, I would start with commenting out all of the non-essential components and only add those gradually as the need arises. Here’s a snippet of my bootstrap.scss, where all of its different parts are imported:

// ...

// Components

// ...

@import "bootstrap/dropdowns";

@import "bootstrap/button-groups";

@import "bootstrap/input-groups";

@import "bootstrap/navbar";

// @import "bootstrap/breadcrumbs";

// @import "bootstrap/badges";

// @import "bootstrap/jumbotron";

// Components w/ JavaScript

@import "bootstrap/modals";

@import "bootstrap/tooltip";

@import "bootstrap/popovers";

// @import "bootstrap/carousel";

I don’t use things like badges or jumbotron so I can safely comment those out.

Unused CSS: Purging CSS with PurgeCSS

A more aggressive and more complicated approach is using a library like PurgeCSS, which analyzes your files, detects CSS content that’s not in use, and removes it. PurgeCSS is an NPM package, so if you’re hosting Django on Heroku, you’ll need to install the Node.js buildpack side-by-side with your Python one.

Conclusion

I hope you’ve found at least one area where you can make your Django app faster. If you have any questions, suggestions, or feedback don’t hesitate to drop me a line on Twitter.

Appendices

Decorator used for QuerySet performance analysis

Below is the code for the django_query_analyze decorator:

from timeit import default_timer as timer

from django.db import connection, reset_queries

def django_query_analyze(func):

"""decorator to perform analysis on Django queries"""

def wrapper(*args, **kwargs):

avs = []

query_counts = []

for _ in range(20):

reset_queries()

start = timer()

func(*args, **kwargs)

end = timer()

avs.append(end - start)

query_counts.append(len(connection.queries))

reset_queries()

print()

print(f"ran function {func.__name__}")

print(f"-" * 20)

print(f"number of queries: {int(sum(query_counts) / len(query_counts))}")

print(f"Time of execution: {float(format(min(avs), '.5f'))}s")

print()

return func(*args, **kwargs)

return wrapper

Ubuntu 18 解决out of memory 问题

报错信息:

程序报错:

#程序报错

MemoryError: Unable to allocate 22.4 GiB for an array with shape (54773, 54773) and data type float64

#写入系统日志错误

$ egrep -i 'killed process' /var/log/syslog

Feb 29 23:55:12 iZbp1dfqh2kwqx54njldloZ kernel: [276605.773003] Killed process 9136 (python) total-vm:8352516kB, anon-rss:3838288kB, file-rss:4kB, shmem-rss:0kB

出现原因

使用cosine_similarity(count_matrix, count_matrix)来计算相似性,此时的count_matrix在54773大小,需要组成一个54773 * 54773 * float64大小的向量,需要的内存空间为22.4GB。

但是阿里云购买的服务器只有4v4G,一共的运存只有4G,会造成out of memory溢出。

溢出之后Linux的OOM killer(Out-Of-Memory killer) 会自动的将溢出的进程杀死,所以会直接kill掉该python进行,无法继续执行。

解决步骤1:添加虚拟内存

内存不足的问题,最好的办法是直接升级服务器配置,升为32G的内存的服务器,但是这样所需要的钱还是不少的,特别是按年购买的服务器,没法临时升级。想到最好的方法便是提升虚拟内存,使用硬盘来协助计算。(在需要实时性的环境上还是尽量升级内存)

开始

我这里虚拟了 30G 的容量进行内存扩容(主要是磁盘也不便宜啊),然后 swap 使用的利用率比例为 60,即:当物理内存剩下 60% 时使用 swap 进行交换。

临时配置

临时配置是指重启之后会失效,仅仅只是保持本次开机起作用。

分配文件空间, 建立一个 30G 的 swap 所需的文件空间

dd if=/dev/zero of=/var/blockd.swap bs=1M count=32768

文件 Swap 格式化

mkswap /var/blockd.swap

Swap 激活

swapon /var/blockd.swap

Swap 挂载

打开 /etc/fstab 文件编辑追加以下内容

/var/blockd.swap swap swap default 0 0

修改 Swap 利用率

sysctl vm.swappiness=60

挂载生效

mount -a

永久配置

永久配置是指重启之后依然保持生效。

分配文件空间

建立一个 30G 的 swap 所需的文件空间

dd if=/dev/zero of=/var/blockd.swap bs=1M count=2048

文件 Swap 格式化

mkswap /var/blockd.swap

Swap 设置自激活

由于 /etc/rc.local 文件会优先于 /etc/fstab 执行,所以在文件 /etc/rc.local 里面增加下面一行命令

swapon /var/blockd.swap

Swap 挂载

打开 /etc/fstab 文件编辑追加以下内容

/var/blockd.swap swap swap default 0 0

修改 Swap 利用率

编辑 /etc/sysctl.conf 实现永久生效

vm.swappiness=60

重启生效

更多命令

Swap 查看

swapon -s

Swap 关闭

swapoff /var/blockd.swap

查看 Swap 利用率

cat /proc/sys/vm/swappiness

参数解释

vm.swappiness

这个参数主要用来表示物理内存还剩多大比例才开始使用内存交换,本文中设置的值为 60 即当物理内存还剩 60% 时开始进行内存交换;这里有一篇英文相关解释:https://askubuntu.com/questions/969065/why-is-swap-being-used-when-vm-swappiness-is-0

最后

关于为什么阿里云的 ECS 关闭了 Swap ,网上很多观点均是因为阿里云为了保护磁盘而默认进行了关闭(其实交换空间频繁读写实际就是对硬盘的操作),反正我们实现我们想要的就可以了,至于损耗嘛就是官方需要考虑的问题了;关于性能的话根据阿里云的磁盘读写速度文档表明 高效云盘 能够达到 130m/s 的读写速度,比老式机械 70m/s 高了不少,凑合着用吧,如果不满于高效云盘的可以考虑 SSD 那这样的话价格也会不同,自己做一下价格对比吧!!!

解决步骤二:关闭OOM Killer

由于是OOM killer,所以需要关闭OOM Killer 来保证程序不被杀死

sysctl -w vm.overcommit_memory=2

固定:

修改/etc/sysctl.conf文件

添加一行

vm.overcommit_memory=2

注意一定要在扩展了虚拟内存之后再添加这个配置,否则的话,服务器会内存溢出周后hang机。

参考文章:

SnippetsLab & github gist & Lepton

gist主要用来管理一些基本的代码片段,比起github上面的项目每次要提交全工程更加轻量和简单。很多时候我们的很多代码片段是可以复用的,这些实际上也跟抽象相关。特别是脚本语言,比如javascript或者python之类,很多时候很少的修改就可以解决其他的问题。

gist的优势

- 每个Gist都是一个Git库,有版本历史,可以被fork或clone

- Gist有两种:公开的和私有的,私有的不会在你的Gist主页显示,也无法用搜索引擎搜索到,但这个链接是人人都能访问的

- Gist可以搜索、下载、嵌入到网页。其中嵌入网页的功能还是不错的

- 有很多时候我们只是想记录代码的一部分,没有必要把一堆的其他代码提交到网上去

- 可以用gist保存一个有历史记录的长期更新的列表清单(知识点、知识迭代等)

- 记录简短的想法或总结:有时候想总结一些技术或经验,或者有一些想法,由于内容比较短,还不足以发表博客,可以先记录下来

使用SnippetsLab来管理Github Gist的好处

- SnippetsLab支持的代码高亮比一般的文本编辑器多很多

- 同步到gist之后,可以保证代码的同步

gist的使用可以参考

Gist使用经验

observablehq

可以用这个应用为gist写独立的网页,方便展示,同时很多javascript代码可以展示最后的结构,前端开发的福音。

如果是纯前端代码,做好之后可以使用这个来发布,不需要在发布一个新的网页了

gist和SnippetsLab相关的一个开源electron软件

我的使用经验

- 使用SnippetsLab来管理代码片段,更专注的代码管理

- 主要用于管理javascript和python的代码片段

- 将经常要更新和使用的list来用snippets来管理,毕竟snippets里面的文件更少,文档模式更方便管理,同时发送到gist会更加方便。

- Lepton是开源的Gist客户端,可以针对需要进行修改相应的代码

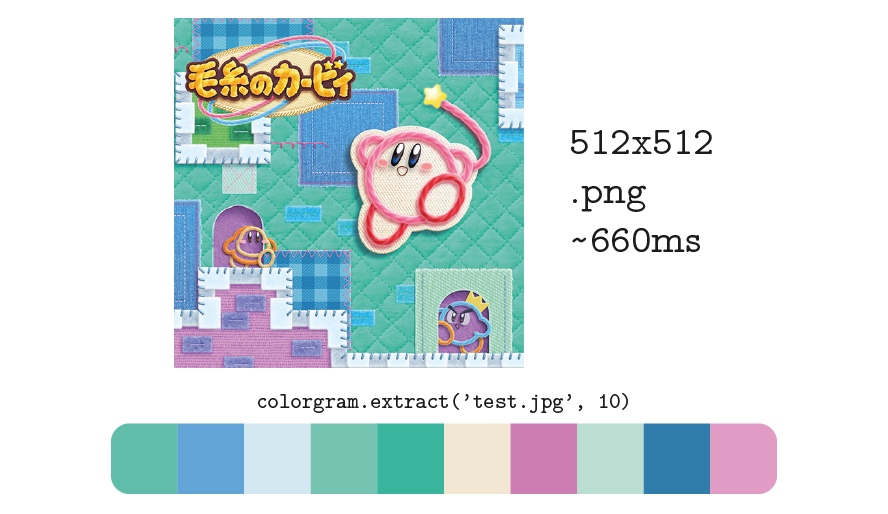

图片颜色画板抓取

color-thief-py

分装的很好,可以直接安装使用,提供api非常方便

colorfic

这个也不错啊

Text2Colors

这个非常有意思,一定要研究一下

后面可以多研究一点这种类型的应用。集成到平台中去

rayleigh

使用颜色搜图

colorgram.py

可以取色

paintingReorganize

Use PCA analysis to reorganize the pixels of a painting into a smooth color palette.

颜色的分析

https://github.com/athoune/Palette

https://github.com/fundevogel/we-love-colors

https://github.com/tody411/PaletteSelection

瀑布流

Waterfall

宽度自适应瀑布流

https://myst729.github.io/Waterfall/

还可以,做成了异步加载

waterfall

原生 JavaScript 实现的瀑布流效果,兼容到 IE8。

Stick

stick是什么 stick是一个响应式的瀑布流组件。

weibocard

Columnizer-jQuery-Plugin

column layout

列式布局

ftcolumnflow

文字的流

查询关键字 column grid

一种比较神奇的排序算法

可以研究研究的神奇的排序算法

这个一定要研究一下

elastic-columns

效果看上去不错,但是感觉实现比较复杂

columns.js

这个包需要好好研究一下

masonry

比较好用的包,代码也比较简单

比较推荐

bootstrap-waterfall

Bootstrap的

比较推荐

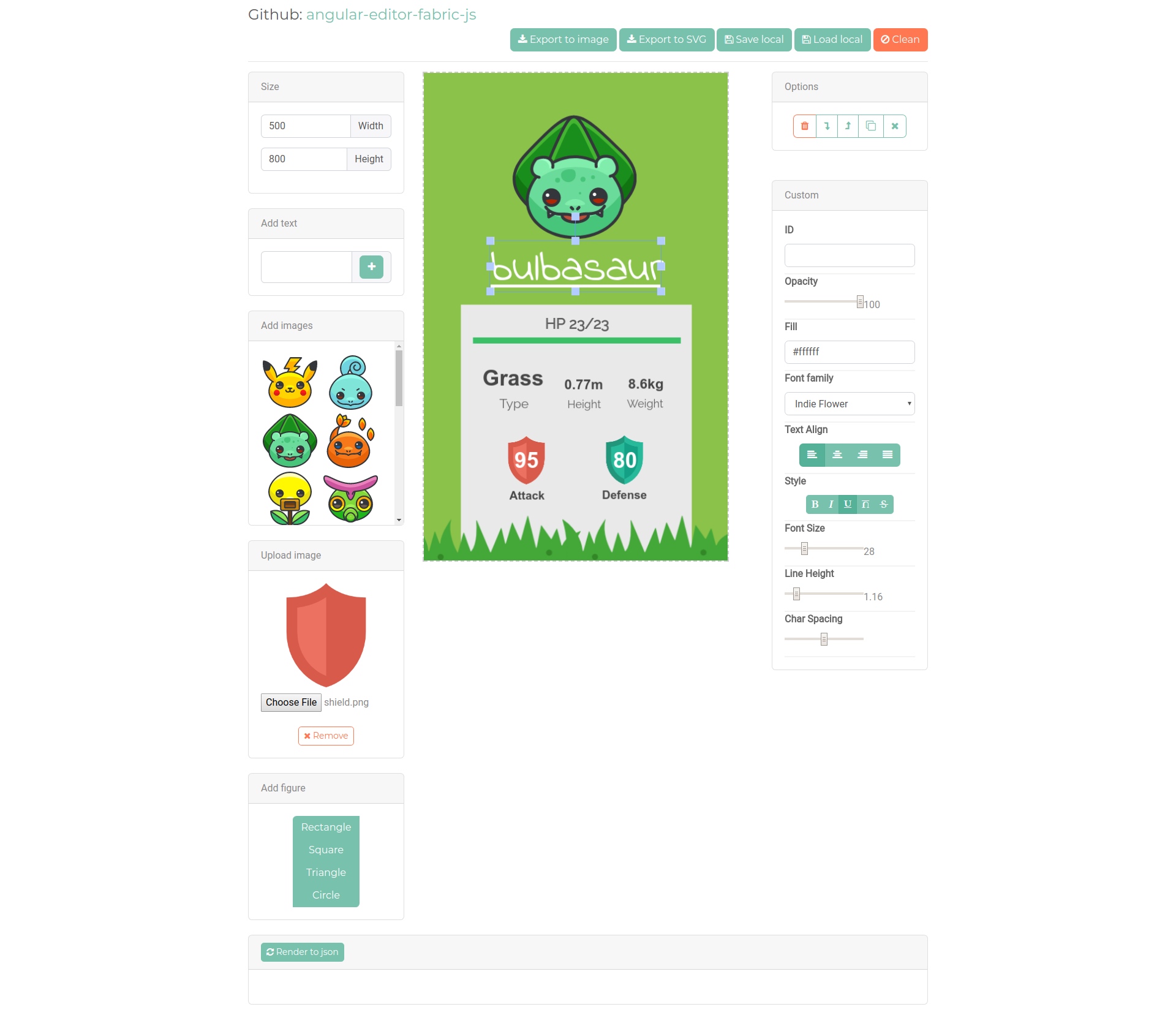

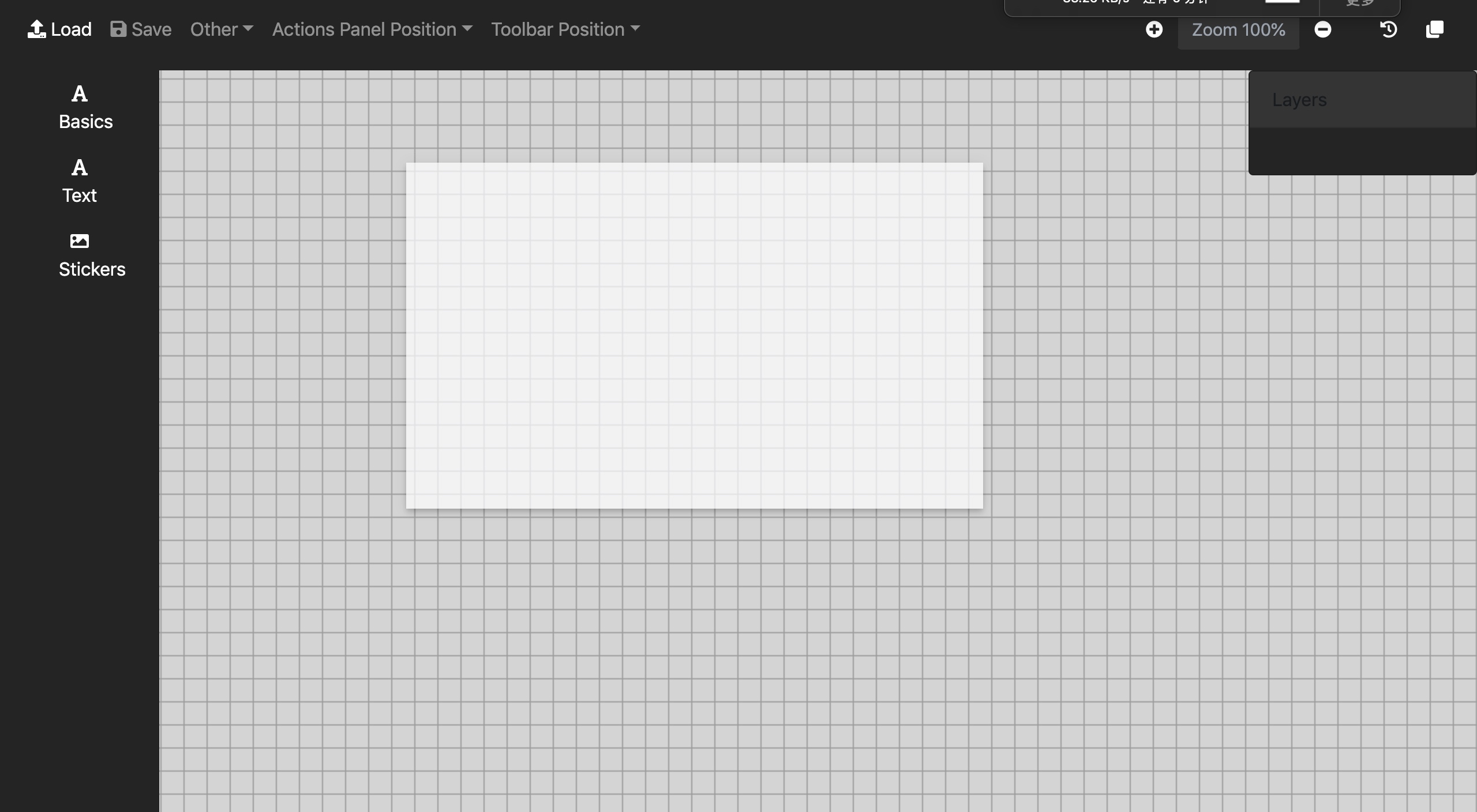

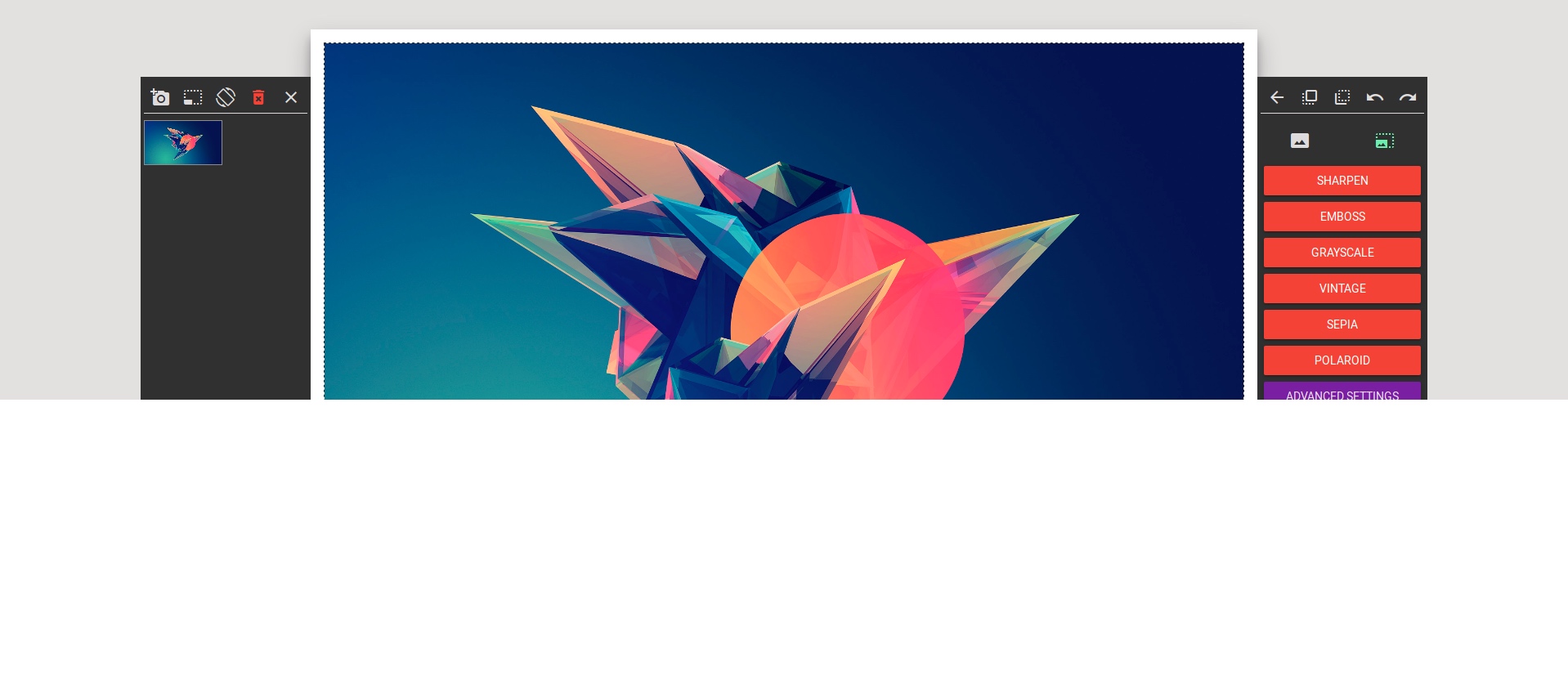

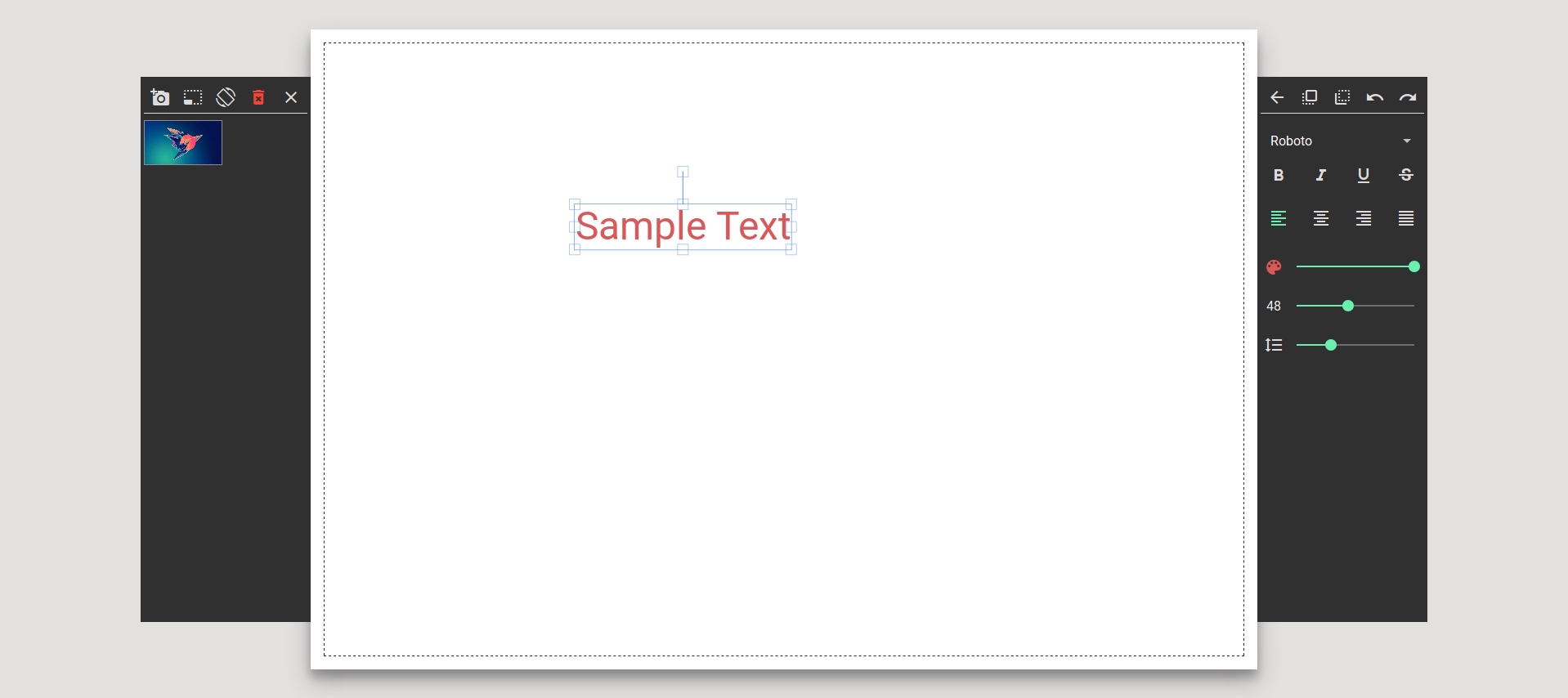

图片Editor分析 fabircjs 扩展包

angular-editor-fabric-js

非常好的使用fabric实现的angular带工具栏的内容

angular-fabric

这个也做的比较好,但是前段页面总是卡卡的,不知道是什么原因

react-fabricjs

react版本,但是没有看到demo

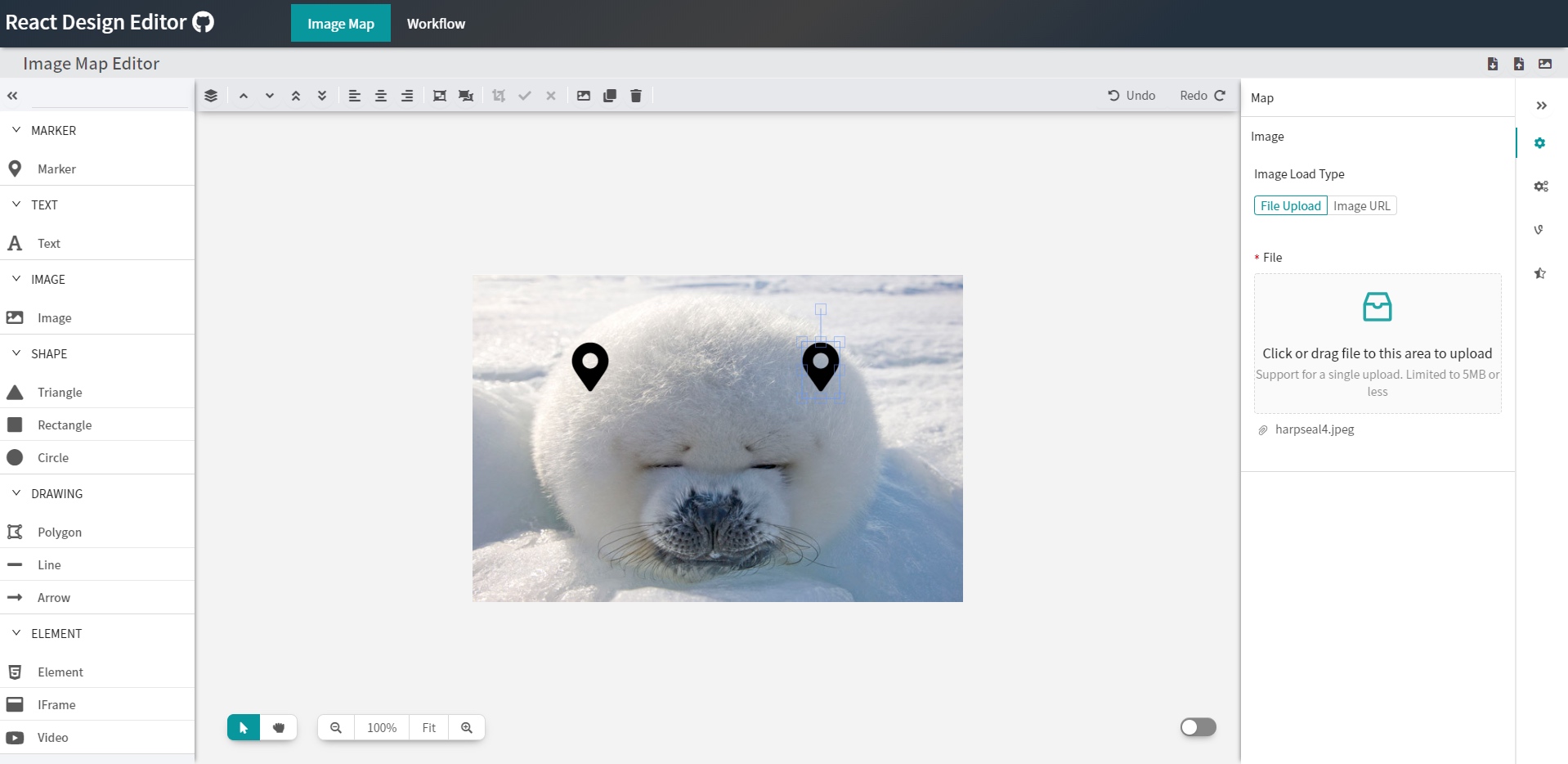

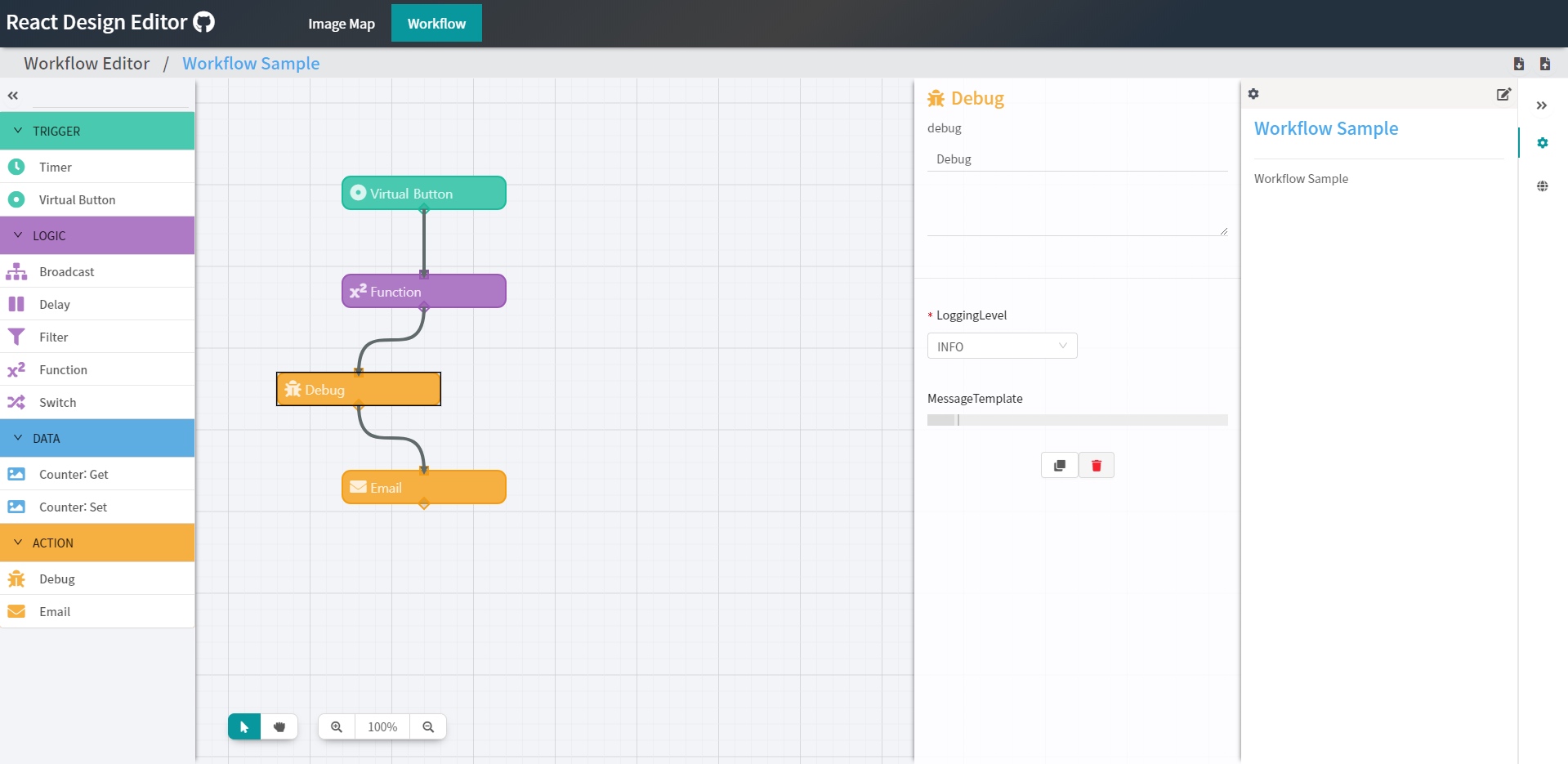

React Design Editor

非常好的基于react的可拖拽花瓣框架

vue-fabri

vue版本的fabric,没有截图

multi-draw

☁️ 基于Fabric.js+Socket.io的多人在线实时同步画板

demo挂了,看不到,可以跑起来看看

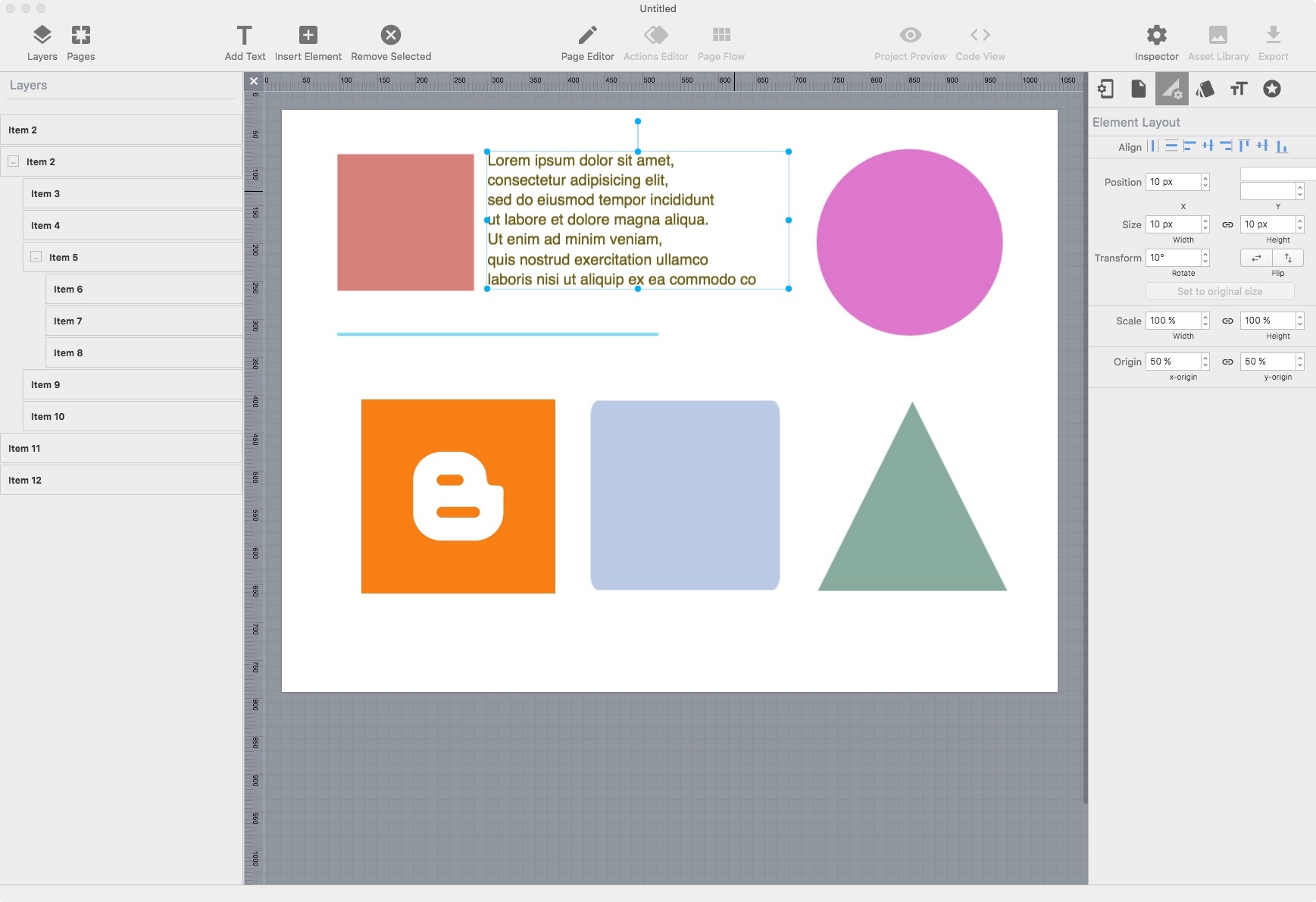

floido-designer

这个感觉非常靠谱。

还是用了electron,这样的好处是可以做成客户端了。体验会更好

vue-card-diy

手机版的自定义画板

react-sketch

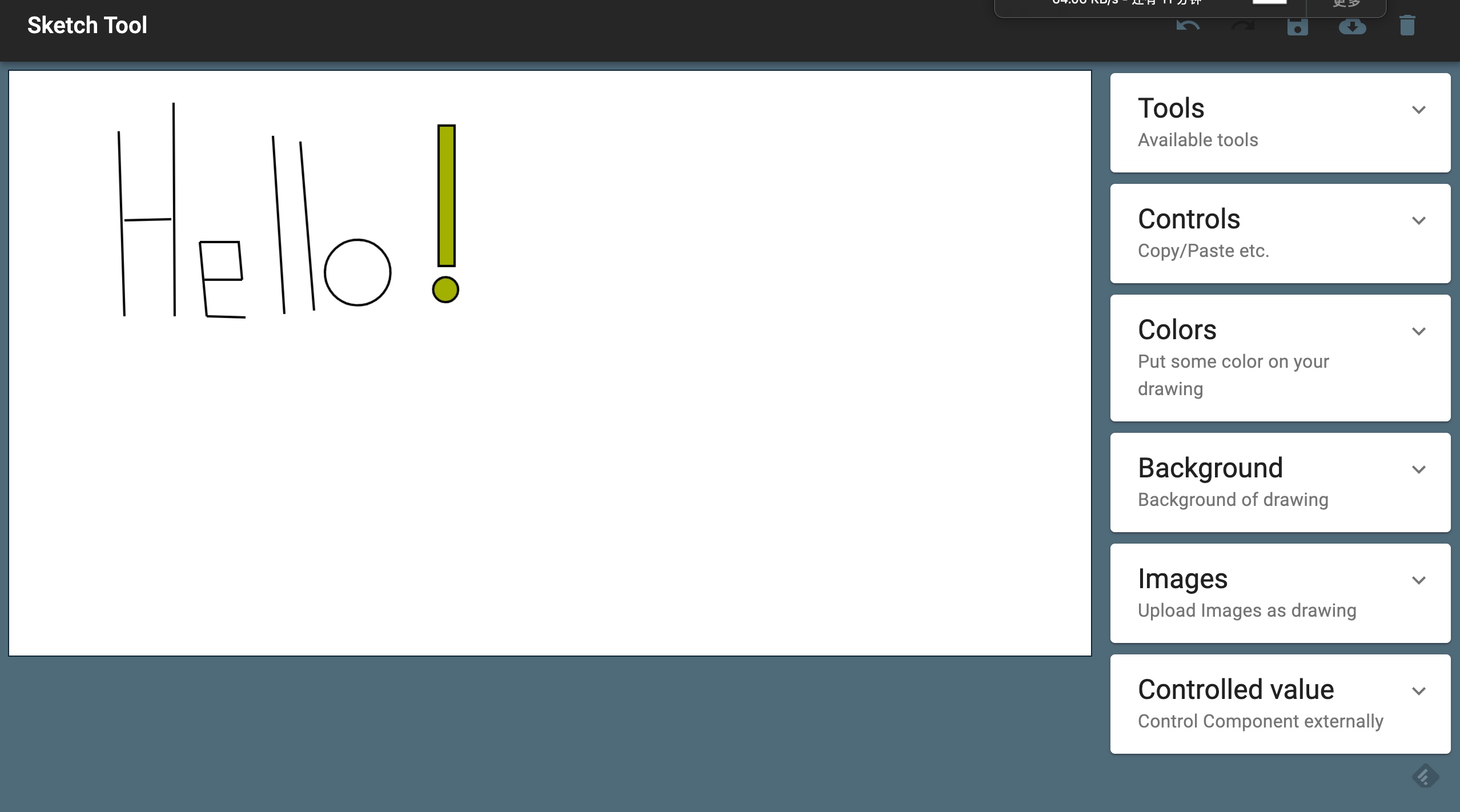

样子做得一般般,但是可以使用了,用来学习是不错的选择

pie-js

还比较不错

geckos

An online Card Editor with Templates http://gulix.github.io/geckos/

没运行起来,后面可以试试

基于canvas的高级画板程序.

全局绘制颜色选择

护眼模式、网格模式切换

自由绘制

画箭头

画直线

画虚线

画圆/椭圆/矩形/直角三角形/普通三角形/等边三角形

文字输入

图片展示及相关移动、缩放等操作

删除功能

支持画板同比缩放

支持图形即时显示

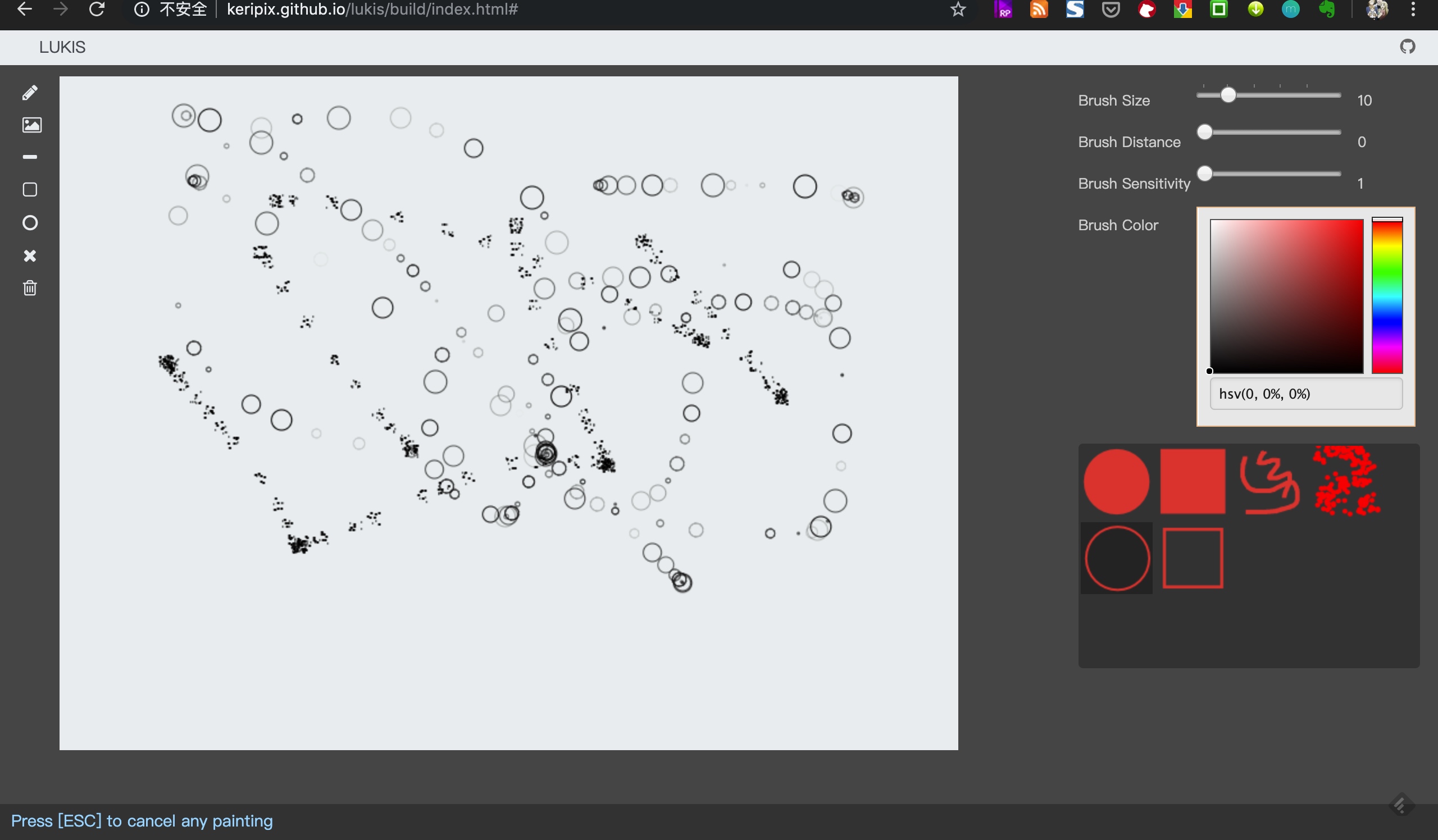

lukis

photo-chrome

A state of the art web based photo editor module made using angular v4

值得好好研究研究的项目

[fabricjs-pathfinding](https://github.com/kevoj/fabricjs-pathfinding)

可视化的走迷宫,非常好的demo例子

AngularJS & FabricJS - 2D - Diagram

A browser-based 2D diagram editor, built using AngularJS, AngularUI and Fabric.js. This project is built by [Big-Silver].

react-redux-fabricjs

Fabric.js with React/Redux

Copyright © 2020 鄂ICP备16010598号-1